6 Relevance feedback and query expansion

Query refining

Synonymy has an impact on the recall of most information retrieval systems

independent of the query

dependent on the query

Two types

Global methods

Expanding or reformulating query terms independent of the query

Local methods

Adjusting a query relative to the documents that initially appear to match the query

The types of Global methods

Query expansion/reformulation with a thesaurus or WordNet

Query expansion via automatic thesaurus generation

Techniques like spelling correction

The type of Local methods

Relevance feedback

Pseudo-relevance feedback, also known as Blind relevance feedback

(Global) indirect relevance feedback

6.1 Relevance feedback

Relevance feedback is one of the most used and most successful approaches

The base idea

It may be difficult to formulate a good query when you don’t know the collection well

Seeing some documents may lead users to refine their understanding of the information they are seeking

The approach of RF

The user issues a (short, simple) query

The system returns an initial set of retrieval results

The user marks some returned documents as relevant or not relevant

The system computes a better representation of the information need based on the user feedback

The system displays a revised set of retrieval results

An example of RF

Image search provides a good example of relevance feedback, which is a domain where a user can easily have difficulty formulating what they want in words, but can easily indicate relevant or nonrelevant images

http://nayana.ece.ucsb.edu/imsearch/imsearch.html

Instructions

Browse: If the first page displayed doesn't include any interesting images, click browse to see the next page

Search: Once you find some initial images you are interested, click on them to select and press search

Iterate: After the search results are displayed, select/unselect more relevant images and click search

The system is based on relevance feedback and it learns while you select more images and iterate

6.1.1 The Rocchio algorithm for RF

The classic algorithm

The models based on VSM

Relevance feedback can improve both recall and precision

But, in practice, it has been shown to be most useful for increasing recall

The underlying theory

We want to find a query vector, that maximizes similarity with relevant documents while minimizing similarity with nonrelevant documents

If Cr is the set of relevant documents and Cnr is the set of nonrelevant documents, then we wish to find:

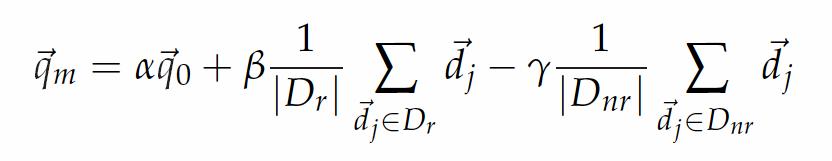

Under cosine similarity, the optimal query vector for separating the relevant and nonrelevant documents is:

The optimal query is the vector difference between the centroids of the relevant and nonrelevant documents

The key is getting the full set of relevant documents and the full set of nonrelevant documents based on users’ feedback

Rocchio algorithm

此主题相关图片如下:

此主题相关图片如下:

q0 is the original query vector

Dr and Dnr are the set of known relevant and nonrelevant documents respectively

α,β and γ are weights attached to each term

Rocchio algorithm

Positive feedback also turns out to be much more valuable than negative feedback, and so most IR systems set γ < β

Reasonable values might be α = 1, β = 0.75, and γ = 0.15

Ide dec-hi

Another alternative is to use only the marked nonrelevant document, which |Dnr| = 1, which has the most consistent perform

The first assumption of RF

The user has to have sufficient knowledge to be able to make an initial query which is at least somewhere close to the documents they desire

Cases where relevance feedback alone is not sufficient include:

Misspellings

Mismatch of searcher’s vocabulary versus collection vocabulary

Laptop VS. notebook computer

Cross-language information retrieval

Documents in the same language cluster more closely together

The second assumption of RF

The term distribution in all relevant documents will be similar to that in the documents marked by the users

The term distribution in all nonrelevant documents will be different from those in relevant documents

This approach does not work well if the relevant documents are a multimodal class

Subsets of the documents using different vocabulary, such as Burma vs. Myanmar

A query for which the answer set is inherently disjunctive, such as Pop stars who once worked at Burger King

Instances of a general concept, which often appear as a disjunction of more specific concepts

for example, felines

Good editorial content in the collection can often provide a solution to this problem

For example, an article on the attitudes of different groups to the situation in Burma could introduce the terminology used by different parties thus linking many document clusters

Google Sets

http://labs.google.com/sets

6.1.2 The disadvantage of RF

Not necessarily popular with users

Users are often reluctant to provide explicit feedback

User do not wish to prolong the search interaction in general

The long queries that are generated by RF are inefficient for a typical IR system

only reweight certain prominent terms in the relevant documents, such as perhaps the top 20 terms by term frequency

RF is mainly a recall enhancing strategy, and web search users are only rarely concerned with getting sufficient recall

6.1.3 Relevance feedback on the web

Similar/related pages feature

Query expansion

Excite web search engine

Clickstream mining for providing indirect RF

Web link structure analysis for providing indirect RF

But provided by page authors rather than readers

Excite web search engine, which initially provided full relevance feedback

For people who used relevance feedback, results were improved about two thirds of the time

However, the feature was in time dropped, due to lack of use

Only about 4% of user query sessions used the relevance feedback option, and these were usually exploiting the “More like this” link next to each result

About 70% of users only looked at the first page of results and did not pursue things any further

6.1.4 Evaluation of RF strategies

Empirically, one round of relevance feedback is often very useful

Two rounds is sometimes marginally more useful

Accordingly, having at east five judged documents is recommended

Evaluation methods

Compute a precision-recall graph

But unfortunately it is cheating

Fairness demands that we should only evaluate with respect to documents not seen by the user

Evaluate based on documents in the residual collection

Unfortunately, the measured performance can then often be lower than for the original query, particularly if there are few relevant documents

It is difficult to validly compare performance with and without RF because the collection size and the number of relevant documents changes

A third method is to have two collections

One is used for the initial query and relevance judgments

The second is then used for comparative evaluation

The best evaluation method is to do user studies

6.1.5 Pseudo-relevance feedback

Also known as blind relevance feedback

The advantage

Assume that the top k ranked documents are relevant, and finally to do RF as before

Evidence suggests that it tends to work better than global analysis( improve about 10%)

The disadvantage

Topic drift

For example, if the query is about copper mines and the top several documents are all about mines in Chile, then there may be query drift in the direction of documents on Chile

6.1.6 Indirect relevance feedback

Less reliable than explicit feedback

More feasible and easy to collect information

DirectHit introduced the idea of ranking more highly documents that users chose to look at more often

6.2 Global methods for query reformulation

6.2.1 Vocabulary tools for query reformulation

By means of a thesaurus or a controlled vocabulary

This includes information about

Words that were omitted from the query

Words that were stemmed to

The number of hits on each term or phrase

Whether words were dynamically turned into phrases

6.2.2 Query expansion

Users give additional input on query words or phrases, which possibly suggested by the IR system

The key is building a thesaurus for query expansion

Use of a controlled vocabulary that is maintained by human editors

Library of Congress Subject Headings

The Dewey Decimal system

An automatically derived thesaurus with word co-occurrence statistics

Query reformulations based on query log mining

Sogou vocabulary

The advantage

Not requiring any user input

Some system can do automatic query expansion with thesaurus

In PubMed system, neoplasms was added to a search for cancer automaticly

Increases recall

Widely used in many science and engineering fields

6.2.3 Automatic thesaurus generation

Automatically generated thesaurus

Methods

Exploit word cooccurrence with text statistics to find the most similar words

Feasible and common

Use a grammatical analysis of the text and to exploit grammatical relations or grammatical dependencies

Advanced but complicated

Computation of co-occurrence thesaurus

We begin with a term-document matrix A, where each cell At,d is a weighted count wt,d for term t and document d

If we then calculate C = AAT, then Cu,v is a similarity score between terms u and v, with a larger number being better

The disadvantages

Tremendous computation

Require dimensionality reduction via Latent Semantic Indexing

Require domain specific thesaurus

The quality of the associations

Term ambiguity easily introduces irrelevant statistically correlated terms

Apple computer may expand to Apple red fruit computer

Not retrieve many additional documents

Since the terms in the automatic thesaurus are highly correlated in documents anyway

The comparison to RF methods

Query expansion is less successful than relevance feedback

Query expansion may be as good as pseudo-relevance feedback

Query expansion have the advantage of being much more understandable to the system user

[此贴子已经被作者于2010-12-14 09:21:12编辑过]

加好友

加好友  发短信

发短信

管理员

管理员

Post By:2008/4/27 7:27:12 [只看该作者]

Post By:2008/4/27 7:27:12 [只看该作者]